Hello! This rather quick and dirty notebook showing how to get started on segmenting nuclei using a neural network in Keras.Download the dataset

The architecture used is the so-called U-Net, which is very common for image segmentation problems such as this. I believe they also have a tendency to work quite well even on small datasets.

Let's get started importing everything we need!

import os import sys import random import warnings import numpy as np import pandas as pd import matplotlib.pyplot as plt from tqdm import tqdm from itertools import chain from skimage.io import imread, imshow, imread_collection, concatenate_images from skimage.transform import resize from skimage.morphology import label from keras.models import Model, load_model from keras.layers import Input from keras.layers.core import Dropout, Lambda from keras.layers.convolutional import Conv2D, Conv2DTranspose from keras.layers.pooling import MaxPooling2D from keras.layers.merge import concatenate from keras.callbacks import EarlyStopping, ModelCheckpoint from keras import backend as K import tensorflow as tf # Set some parameters IMG_WIDTH = 128 IMG_HEIGHT = 128 IMG_CHANNELS = 3 TRAIN_PATH = 'D/input/stage1_train/' TEST_PATH = 'D/input/stage1_test/' warnings.filterwarnings('ignore', category=UserWarning, module='skimage') seed = 42 random.seed = seed np.random.seed = seed

Using TensorFlow backend.

# Get train and test IDs train_ids = next(os.walk(TRAIN_PATH))[1] test_ids = next(os.walk(TEST_PATH))[1]

Get the data

Let's first import all the images and associated masks. I downsample both the training and test images to keep things light and manageable, but we need to keep a record of the original sizes of the test images to upsample our predicted masks and create correct run-length encodings later on. There are definitely better ways to handle this, but it works fine for now!

# Get and resize train images and masks X_train = np.zeros((len(train_ids), IMG_HEIGHT, IMG_WIDTH, IMG_CHANNELS), dtype=np.uint8) Y_train = np.zeros((len(train_ids), IMG_HEIGHT, IMG_WIDTH, 1), dtype=np.bool) print('Getting and resizing train images and masks ... ') sys.stdout.flush() for n, id_ in tqdm(enumerate(train_ids), total=len(train_ids)): path = TRAIN_PATH + id_ img = imread(path + '/images/' + id_ + '.png')[:,:,:IMG_CHANNELS] img = resize(img, (IMG_HEIGHT, IMG_WIDTH), mode='constant', preserve_range=True) X_train[n] = img mask = np.zeros((IMG_HEIGHT, IMG_WIDTH, 1), dtype=np.bool) for mask_file in next(os.walk(path + '/masks/'))[2]: mask_ = imread(path + '/masks/' + mask_file) mask_ = np.expand_dims(resize(mask_, (IMG_HEIGHT, IMG_WIDTH), mode='constant', preserve_range=True), axis=-1) mask = np.maximum(mask, mask_) Y_train[n] = mask # Get and resize test images X_test = np.zeros((len(test_ids), IMG_HEIGHT, IMG_WIDTH, IMG_CHANNELS), dtype=np.uint8) sizes_test = [] print('Getting and resizing test images ... ') sys.stdout.flush() for n, id_ in tqdm(enumerate(test_ids), total=len(test_ids)): path = TEST_PATH + id_ img = imread(path + '/images/' + id_ + '.png')[:,:,:IMG_CHANNELS] sizes_test.append([img.shape[0], img.shape[1]]) img = resize(img, (IMG_HEIGHT, IMG_WIDTH), mode='constant', preserve_range=True) X_test[n] = img print('Done!')

Getting and resizing train images and masks ... 100%|██████████| 670/670 [02:30<00:00, 4.44it/s] Getting and resizing test images ... 100%|██████████| 65/65 [00:01<00:00, 51.95it/s] Done!

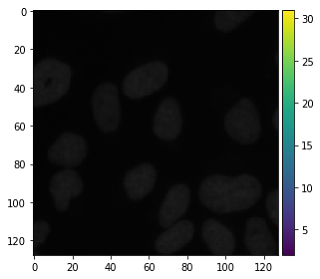

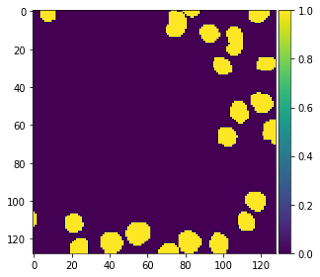

Let's see if things look all right by drawing some random images and their associated masks.

# Check if training data looks all right ix = random.randint(0, len(train_ids)) imshow(X_train[ix]) plt.show() imshow(np.squeeze(Y_train[ix])) plt.show()

Seems good!

Create our Keras metric

Now we try to define the mean average precision at the different intersection over union (IoU) thresholds metric in Keras. TensorFlow has a mean IoU metric, but it doesn't have any native support for the mean over multiple thresholds, so I tried to implement this. I'm by no means certain that this implementation is correct, though! Any assistance in verifying this would be most welcome!

# Define IoU metric def mean_iou(y_true, y_pred): prec = [] for t in np.arange(0.5, 1.0, 0.05): y_pred_ = tf.to_int32(y_pred > t) score, up_opt = tf.metrics.mean_iou(y_true, y_pred_, 2) K.get_session().run(tf.local_variables_initializer()) with tf.control_dependencies([up_opt]): score = tf.identity(score) prec.append(score) return K.mean(K.stack(prec), axis=0)

Build and train our neural network

Next, we build our U-Net model, loosely based on U-Net: Convolutional Networks for Biomedical Image Segmentation and very similar to this repo from the Kaggle Ultrasound Nerve Segmentation competition.

# Build U-Net model inputs = Input((IMG_HEIGHT, IMG_WIDTH, IMG_CHANNELS)) s = Lambda(lambda x: x / 255) (inputs) c1 = Conv2D(16, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (s) c1 = Dropout(0.1) (c1) c1 = Conv2D(16, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c1) p1 = MaxPooling2D((2, 2)) (c1) c2 = Conv2D(32, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (p1) c2 = Dropout(0.1) (c2) c2 = Conv2D(32, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c2) p2 = MaxPooling2D((2, 2)) (c2) c3 = Conv2D(64, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (p2) c3 = Dropout(0.2) (c3) c3 = Conv2D(64, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c3) p3 = MaxPooling2D((2, 2)) (c3) c4 = Conv2D(128, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (p3) c4 = Dropout(0.2) (c4) c4 = Conv2D(128, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c4) p4 = MaxPooling2D(pool_size=(2, 2)) (c4) c5 = Conv2D(256, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (p4) c5 = Dropout(0.3) (c5) c5 = Conv2D(256, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c5) u6 = Conv2DTranspose(128, (2, 2), strides=(2, 2), padding='same') (c5) u6 = concatenate([u6, c4]) c6 = Conv2D(128, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (u6) c6 = Dropout(0.2) (c6) c6 = Conv2D(128, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c6) u7 = Conv2DTranspose(64, (2, 2), strides=(2, 2), padding='same') (c6) u7 = concatenate([u7, c3]) c7 = Conv2D(64, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (u7) c7 = Dropout(0.2) (c7) c7 = Conv2D(64, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c7) u8 = Conv2DTranspose(32, (2, 2), strides=(2, 2), padding='same') (c7) u8 = concatenate([u8, c2]) c8 = Conv2D(32, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (u8) c8 = Dropout(0.1) (c8) c8 = Conv2D(32, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c8) u9 = Conv2DTranspose(16, (2, 2), strides=(2, 2), padding='same') (c8) u9 = concatenate([u9, c1], axis=3) c9 = Conv2D(16, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (u9) c9 = Dropout(0.1) (c9) c9 = Conv2D(16, (3, 3), activation='elu', kernel_initializer='he_normal', padding='same') (c9) outputs = Conv2D(1, (1, 1), activation='sigmoid') (c9) model = Model(inputs=[inputs], outputs=[outputs]) model.compile(optimizer='adam', loss='binary_crossentropy', metrics=[mean_iou]) model.summary()

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 128, 128, 3) 0

__________________________________________________________________________________________________

lambda_1 (Lambda) (None, 128, 128, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv2d_1 (Conv2D) (None, 128, 128, 16) 448 lambda_1[0][0]

__________________________________________________________________________________________________

dropout_1 (Dropout) (None, 128, 128, 16) 0 conv2d_1[0][0]

__________________________________________________________________________________________________

conv2d_2 (Conv2D) (None, 128, 128, 16) 2320 dropout_1[0][0]

__________________________________________________________________________________________________

max_pooling2d_1 (MaxPooling2D) (None, 64, 64, 16) 0 conv2d_2[0][0]

__________________________________________________________________________________________________

conv2d_3 (Conv2D) (None, 64, 64, 32) 4640 max_pooling2d_1[0][0]

__________________________________________________________________________________________________

dropout_2 (Dropout) (None, 64, 64, 32) 0 conv2d_3[0][0]

__________________________________________________________________________________________________

conv2d_4 (Conv2D) (None, 64, 64, 32) 9248 dropout_2[0][0]

__________________________________________________________________________________________________

max_pooling2d_2 (MaxPooling2D) (None, 32, 32, 32) 0 conv2d_4[0][0]

__________________________________________________________________________________________________

conv2d_5 (Conv2D) (None, 32, 32, 64) 18496 max_pooling2d_2[0][0]

__________________________________________________________________________________________________

dropout_3 (Dropout) (None, 32, 32, 64) 0 conv2d_5[0][0]

__________________________________________________________________________________________________

conv2d_6 (Conv2D) (None, 32, 32, 64) 36928 dropout_3[0][0]

__________________________________________________________________________________________________

max_pooling2d_3 (MaxPooling2D) (None, 16, 16, 64) 0 conv2d_6[0][0]

__________________________________________________________________________________________________

conv2d_7 (Conv2D) (None, 16, 16, 128) 73856 max_pooling2d_3[0][0]

__________________________________________________________________________________________________

dropout_4 (Dropout) (None, 16, 16, 128) 0 conv2d_7[0][0]

__________________________________________________________________________________________________

conv2d_8 (Conv2D) (None, 16, 16, 128) 147584 dropout_4[0][0]

__________________________________________________________________________________________________

max_pooling2d_4 (MaxPooling2D) (None, 8, 8, 128) 0 conv2d_8[0][0]

__________________________________________________________________________________________________

conv2d_9 (Conv2D) (None, 8, 8, 256) 295168 max_pooling2d_4[0][0]

__________________________________________________________________________________________________

dropout_5 (Dropout) (None, 8, 8, 256) 0 conv2d_9[0][0]

__________________________________________________________________________________________________

conv2d_10 (Conv2D) (None, 8, 8, 256) 590080 dropout_5[0][0]

__________________________________________________________________________________________________

conv2d_transpose_1 (Conv2DTrans (None, 16, 16, 128) 131200 conv2d_10[0][0]

__________________________________________________________________________________________________

concatenate_1 (Concatenate) (None, 16, 16, 256) 0 conv2d_transpose_1[0][0]

conv2d_8[0][0]

__________________________________________________________________________________________________

conv2d_11 (Conv2D) (None, 16, 16, 128) 295040 concatenate_1[0][0]

__________________________________________________________________________________________________

dropout_6 (Dropout) (None, 16, 16, 128) 0 conv2d_11[0][0]

__________________________________________________________________________________________________

conv2d_12 (Conv2D) (None, 16, 16, 128) 147584 dropout_6[0][0]

__________________________________________________________________________________________________

conv2d_transpose_2 (Conv2DTrans (None, 32, 32, 64) 32832 conv2d_12[0][0]

__________________________________________________________________________________________________

concatenate_2 (Concatenate) (None, 32, 32, 128) 0 conv2d_transpose_2[0][0]

conv2d_6[0][0]

__________________________________________________________________________________________________

conv2d_13 (Conv2D) (None, 32, 32, 64) 73792 concatenate_2[0][0]

__________________________________________________________________________________________________

dropout_7 (Dropout) (None, 32, 32, 64) 0 conv2d_13[0][0]

__________________________________________________________________________________________________

conv2d_14 (Conv2D) (None, 32, 32, 64) 36928 dropout_7[0][0]

__________________________________________________________________________________________________

conv2d_transpose_3 (Conv2DTrans (None, 64, 64, 32) 8224 conv2d_14[0][0]

__________________________________________________________________________________________________

concatenate_3 (Concatenate) (None, 64, 64, 64) 0 conv2d_transpose_3[0][0]

conv2d_4[0][0]

__________________________________________________________________________________________________

conv2d_15 (Conv2D) (None, 64, 64, 32) 18464 concatenate_3[0][0]

__________________________________________________________________________________________________

dropout_8 (Dropout) (None, 64, 64, 32) 0 conv2d_15[0][0]

__________________________________________________________________________________________________

conv2d_16 (Conv2D) (None, 64, 64, 32) 9248 dropout_8[0][0]

__________________________________________________________________________________________________

conv2d_transpose_4 (Conv2DTrans (None, 128, 128, 16) 2064 conv2d_16[0][0]

__________________________________________________________________________________________________

concatenate_4 (Concatenate) (None, 128, 128, 32) 0 conv2d_transpose_4[0][0]

conv2d_2[0][0]

__________________________________________________________________________________________________

conv2d_17 (Conv2D) (None, 128, 128, 16) 4624 concatenate_4[0][0]

__________________________________________________________________________________________________

dropout_9 (Dropout) (None, 128, 128, 16) 0 conv2d_17[0][0]

__________________________________________________________________________________________________

conv2d_18 (Conv2D) (None, 128, 128, 16) 2320 dropout_9[0][0]

__________________________________________________________________________________________________

conv2d_19 (Conv2D) (None, 128, 128, 1) 17 conv2d_18[0][0]

==================================================================================================

Total params: 1,941,105

Trainable params: 1,941,105

Non-trainable params: 0

__________________________________________________________________________________________________

Next, we fit the model on the training data, using a validation split of 0.1. We use a small batch size because we have so little data. I recommend using checkpointing and early stopping when training your model. I won't do it here to make things a bit more reproducible (although it's very likely that your results will be different anyway). I'll just train for 10 epochs, which takes around 10 minutes in the Kaggle kernel with the current parameters.

Fit model earlystopper = EarlyStopping(patience=5, verbose=1) checkpointer = ModelCheckpoint('model-dsbowl2018-1.h5', verbose=1, save_best_only=True) results = model.fit(X_train, Y_train, validation_split=0.1, batch_size=16, epochs=50, callbacks=[earlystopper, checkpointer])

Train on 603 samples, validate on 67 samples Epoch 1/50 592/603 [============================>.] - ETA: 2s - loss: 0.4573 - mean_iou: 0.4127 Epoch 00001: val_loss improved from inf to 0.25262, saving model to model-dsbowl2018-1.h5 603/603 [==============================] - 132s 218ms/step - loss: 0.4536 - mean_iou: 0.4131 - val_loss: 0.2526 - val_mean_iou: 0.4355 Epoch 2/50 592/603 [============================>.] - ETA: 2s - loss: 0.2107 - mean_iou: 0.4746 Epoch 00002: val_loss improved from 0.25262 to 0.14263, saving model to model-dsbowl2018-1.h5 603/603 [==============================] - 132s 220ms/step - loss: 0.2099 - mean_iou: 0.4754 - val_loss: 0.1426 - val_mean_iou: 0.5222 Epoch 3/50 592/603 [============================>.] - ETA: 2s - loss: 0.1473 - mean_iou: 0.5562 Epoch 00003: val_loss improved from 0.14263 to 0.10655, saving model to model-dsbowl2018-1.h5 603/603 [==============================] - 131s 217ms/step - loss: 0.1463 - mean_iou: 0.5568 - val_loss: 0.1065 - val_mean_iou: 0.5894 Epoch 4/50................ Epoch 37/50 592/603 [============================>.] - ETA: 2s - loss: 0.0666 - mean_iou: 0.8283 Epoch 00037: val_loss did not improve 603/603 [==============================] - 118s 196ms/step - loss: 0.0663 - mean_iou: 0.8283 - val_loss: 0.0558 - val_mean_iou: 0.8289 Epoch 38/50 592/603 [============================>.] - ETA: 2s - loss: 0.0658 - mean_iou: 0.8296 Epoch 00038: val_loss did not improve 603/603 [==============================] - 117s 193ms/step - loss: 0.0657 - mean_iou: 0.8296 - val_loss: 0.0562 - val_mean_iou: 0.8302 Epoch 00038: early stopping

All right, looks good! The loss seems to be a bit erratic, though. I'll leave it to you to improve the model architecture and parameters!

Make predictions

Let's make predictions both on the test set, the val set and the train set (as a sanity check). Remember to load the best-saved model if you've used early stopping and checkpointing.

# Predict on train, val and test model = load_model('model-dsbowl2018-1.h5', custom_objects={'mean_iou': mean_iou}) preds_train = model.predict(X_train[:int(X_train.shape[0]*0.9)], verbose=1) preds_val = model.predict(X_train[int(X_train.shape[0]*0.9):], verbose=1) preds_test = model.predict(X_test, verbose=1) # Threshold predictions preds_train_t = (preds_train > 0.5).astype(np.uint8) preds_val_t = (preds_val > 0.5).astype(np.uint8) preds_test_t = (preds_test > 0.5).astype(np.uint8) # Create list of upsampled test masks preds_test_upsampled = [] for i in range(len(preds_test)): preds_test_upsampled.append(resize(np.squeeze(preds_test[i]), (sizes_test[i][0], sizes_test[i][1]), mode='constant', preserve_range=True))

603/603 [==============================] - 38s 63ms/step 67/67 [==============================] - 4s 61ms/step 65/65 [==============================] - 4s 62ms/step

# Perform a sanity check on some random training samples ix = random.randint(0, len(preds_train_t)) imshow(X_train[ix]) plt.show() imshow(np.squeeze(Y_train[ix])) plt.show() imshow(np.squeeze(preds_train_t[ix])) plt.show()

The model is at least able to fit the training data! Certainly a lot of room for improvement even here, but a decent start. How about the validation data?

# Perform a sanity check on some random validation samples ix = random.randint(0, len(preds_val_t)) imshow(X_train[int(X_train.shape[0]*0.9):][ix]) plt.show() imshow(np.squeeze(Y_train[int(Y_train.shape[0]*0.9):][ix])) plt.show() imshow(np.squeeze(preds_val_t[ix])) plt.show()

Not too shabby! Definitely needs some more training and tweaking.

Encode and submit our results

Now it's time to submit our results.

def rle_encoding(x): dots = np.where(x.T.flatten() == 1)[0] run_lengths = [] prev = -2 for b in dots: if (b>prev+1): run_lengths.extend((b + 1, 0)) run_lengths[-1] += 1 prev = b return run_lengths def prob_to_rles(x, cutoff=0.5): lab_img = label(x > cutoff) for i in range(1, lab_img.max() + 1): yield rle_encoding(lab_img == i)

Let's iterate over the test IDs and generate run-length encodings for each separate mask identified by skimage ...

new_test_ids = [] rles = [] for n, id_ in enumerate(test_ids): rle = list(prob_to_rles(preds_test_upsampled[n])) rles.extend(rle) new_test_ids.extend([id_] * len(rle))

... and then finally create our submission!

# Create submission DataFrame sub = pd.DataFrame() sub['ImageId'] = new_test_ids sub['EncodedPixels'] = pd.Series(rles).apply(lambda x: ' '.join(str(y) for y in x)) sub.to_csv('sub-dsbowl2018-1.csv', index=False)

You should easily be able to stabilize and improve the results just by changing a few parameters, tweaking the architecture a little bit and training longer with early stopping.

Have fun!

RECOMMENDED

2 Comments

I couldn't sign in to Kaggle, so I'm not able to download dataset. could you please send URL in another host?

ReplyDeleteThis iis a great blog

ReplyDelete